Artificial Intelligence, the future of Content Management and the Web

How AI can speed up the "Big Reverse of the Web", how content management systems like Drupal can integrate with AI tools, how to optimize your website for AI crawlers, and the short- and long-term impact of AI bots on digital marketing.

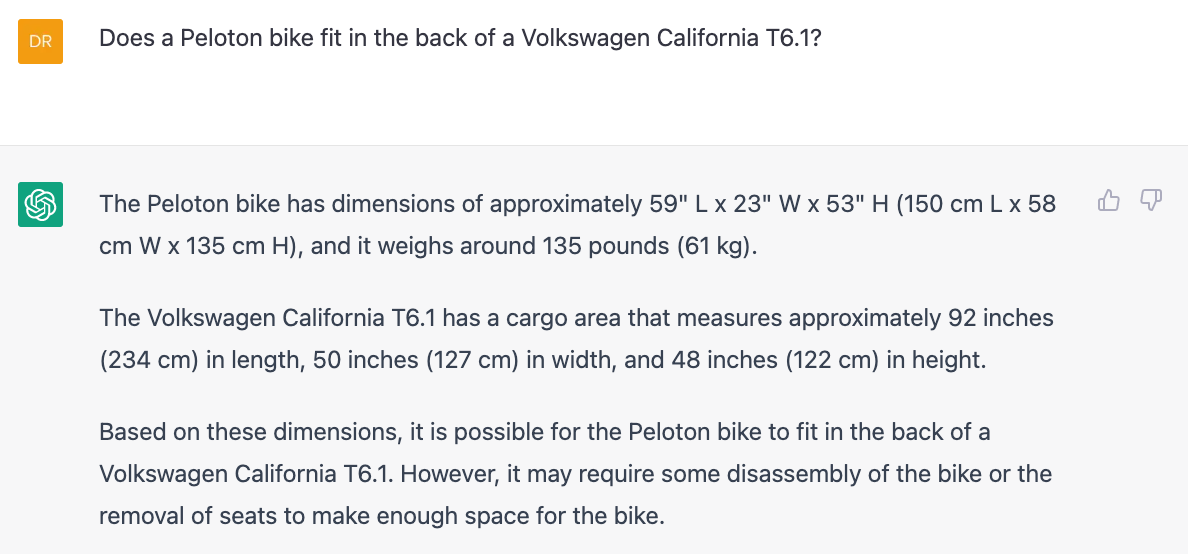

I recently bought a Peloton bike as a Christmas gift for my wife. The Peloton was for our house in Belgium. Because Peloton does not deliver to Belgium yet, I had to find a way to transport one from Germany to Belgium. It was a bit of a challenge as the bike is quite large, and I wasn't sure if it would fit in the back of our car.

I tried measuring both the trunk of my car and another Peloton, but I still wasn't sure the bike would fit. I tried Googling the answer, but search engines aren't great at answering these types of questions today. Being both uncertain of the answer and too busy (okay, let's be real – lazy) to figure it out myself, I decided to ship the bike with a courier. When in doubt, outsource the problem.

To my surprise, when Microsoft launched its Bing and ChatGPT integration not long after my bike-delivery conundrum, one of the demos showed ChatGPT answering the question of whether a package fits in the back of a car. I'll be damned! I could have saved money on a courier after all.

After watching the event, I asked ChatGPT, and it turns out the Peloton would have fit. That is, assuming we can trust the correctness of ChatGPT's answer.

What is interesting about the Peloton example is that it combines data from multiple websites. Combining data from multiple sources is often more helpful than traditional search, where users have to aggregate and combine the information on their own.

Examples like this affirm my belief that AI tools are one of the next big leaps in the internet's progress.

AI disintermediates traditional search engines

Since its commercial debut in the early 90s, the internet has repeatedly upset the established order by slowly but surely eliminating middlemen. Book stores, photo shops, travel agents, stock brokers, bank tellers and music stores are just a few examples of the kinds of intermediaries who have already been disrupted by their online counterparts.

A search engine acts as a middleman between you and the information you're seeking. It, too, will be disintermediated, and AI seems to be the best way of disintermediating it.

Many people have talked about how AI could even destroy Google. Personally, I think that is overly dramatic. Google will have to change and transform itself, and it's been doing that for years now. In the end, I believe Google will be just fine. AI disintermediates traditional search engines, but search engines obviously won't go away.

The Big Reverse of the Web marches on

The automatic combining of data from multiple websites is consistent with what I've called the Big Reverse of the Web, a slow but steady evolution towards a push-based web; a web where information comes to us, rather than the current, search-dominant web. As I wrote in 2015:

I believe that for the web to reach its full potential, it will go through a massive re-architecture and re-platforming in the next decade. The current web is "pull-based", meaning we visit websites. The future of the web is "push-based", meaning the web will be coming to us. In the next 10 years, we will witness a transformation from a pull-based web to a push-based web. When this "Big Reverse" is complete, the web will disappear into the background much like our electricity or water supply.

Facebook was an early example of what a push-based experience looks like. Facebook "pushes" a stream of aggregated information designed to tell you what is happening with your friends and family; you no longer have to "pull" them or ask them individually how they are doing.

A similar dynamic happens when AI search engines give us the answers to our questions rather than redirecting us to a variety of different websites. I no longer have to "pull" the answer from these websites; it is "pushed" to me instead. Trying to figure out if a package fits in the back of my car is the perfect example of this.

Unlocking the short term potential of Generative AI for CMS

While it might take a while for AI search to work out some early kinks, in the near term, Generative AI will lead to an increasing amount of content being produced. That is bad news for the web, as a lot of that content will likely end up being spam. But it is also good news for CMSs, as there will be a lot more legitimate content to manage as well.

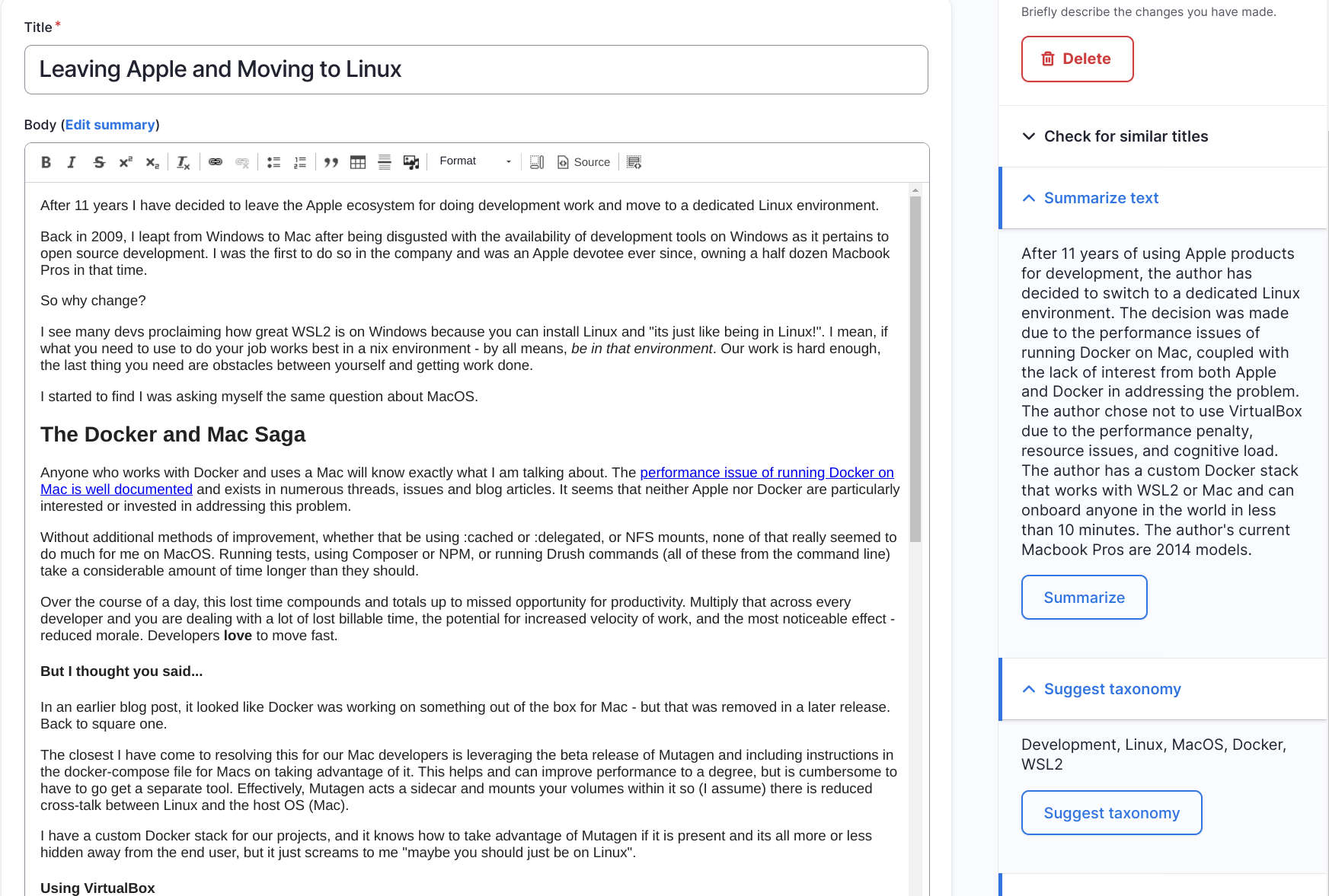

I was excited to see that Kevin Quillen from Velir created a number of Drupal integrations for ChatGPT. They allow us to experiment with how ChatGPT will influence CMSs like Drupal.

For example, the video below shows how the power of Generative AI can be used from within Drupal to help content creators generate fresh ideas and produce content that resonates with their audience.

Similarly, AI integrations can be used to translate content into different languages, suggest tags or taxonomy terms, help optimize content for search engines, summarize content, match your content's tone to an organizational standard, and much more.

The screenshot below shows how some of these use cases have been implemented in Drupal:

The Drupal modules behind the video and screenshot are Open Source: see the OpenAI project on Drupal.org. Anyone can experiment with these modules and use them as a foundation for their own exploration. Sidenote: another example of how Open Source innovation wins every single time.

If you look at the source code of these modules, you can see that it is relatively easy to add AI capabilities to Drupal. ChatGPT's APIs make the integration process straightforward. Extrapolating from Drupal, I believe it is very likely that in the next year, every CMS will offer AI capabilities for creating and managing content.
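To give a feel for how small such an integration can be, here is a minimal Python sketch that asks OpenAI's Chat Completions endpoint to suggest taxonomy terms for a piece of content. It is not code from the Drupal OpenAI modules (those are written in PHP); the prompt, the default model name, and the helper names `build_tag_request` and `parse_tags` are illustrative assumptions, and the request is only built here, not sent.

```python
import json
import urllib.request

# REST endpoint of OpenAI's Chat Completions API.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_tag_request(article_text: str, api_key: str,
                      model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a request asking the model to suggest tags."""
    payload = {
        "model": model,
        "messages": [
            # Hypothetical prompt: the system message sets the task,
            # the user message carries the content being edited in the CMS.
            {"role": "system",
             "content": "Suggest up to five taxonomy terms for the article. "
                        "Reply with a comma-separated list only."},
            {"role": "user", "content": article_text},
        ],
        "temperature": 0.2,  # low temperature for more predictable suggestions
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_tags(response_body: bytes) -> list[str]:
    """Turn the model's comma-separated reply into a clean list of tags."""
    reply = json.loads(response_body)["choices"][0]["message"]["content"]
    return [tag.strip() for tag in reply.split(",") if tag.strip()]
```

In a real integration, the request would be sent with `urllib.request.urlopen(req)`, the response body passed to `parse_tags`, and the suggestions shown to an editor for review rather than saved automatically.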

In short, you can expect many text fields to become "AI-enhanced" in the next 18 months.

Boost your website's visibility by optimizing for AI crawlers

Another short-term change is that marketers will seek to better promote their content to AI bots, just like they currently do with search engines.

I don't believe AI optimization will be very different from Search Engine Optimization (SEO). Like search engines, AI bots will have to put a lot of emphasis on the trustworthiness, authority, relevance, and understandability of content. It will remain essential to have high-quality content.

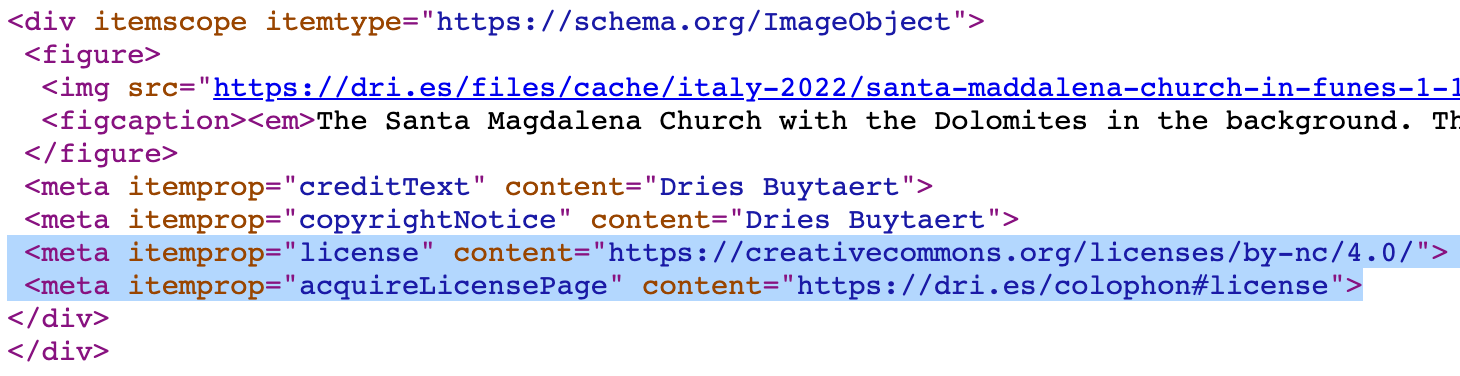

Right now, attribution is a problem in AI search engines. It's often impossible to know where content is sourced from and, as a result, whether to trust AI bots. I hope that more AI bots will provide attribution in the future.

I also expect that more websites will explicitly license their content, and specify the ways that search engines, crawlers, and chatbots can use, remix, and adapt their content.

As can be seen from the screenshot above, I specify a license for all 10,000+ photos on my site. I make them available under Creative Commons. The license is specified in the HTML code, and can be programmatically extracted by a crawler. I do something very similar for my blog posts.
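A crawler needs very little code to pick up such a license. The sketch below assumes the license is marked up with the common `rel="license"` link convention used in Creative Commons embed code; the exact markup on this site may differ, and the sample HTML in the test is hypothetical. It relies only on Python's standard-library `html.parser`.

```python
from html.parser import HTMLParser


class LicenseLinkParser(HTMLParser):
    """Collect href values of <a> and <link> elements carrying rel="license"."""

    def __init__(self) -> None:
        super().__init__()
        self.licenses: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags like <link ... /> are also routed here by default.
        if tag not in ("a", "link"):
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. rel="license noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "license" in rel_tokens and href:
            self.licenses.append(href)


def extract_licenses(html: str) -> list[str]:
    """Return every license URL declared in the given HTML, in document order."""
    parser = LicenseLinkParser()
    parser.feed(html)
    parser.close()
    return parser.licenses
```

Because the license is a machine-readable link rather than prose in a footer, a bot can make a decision per page without having to interpret natural language.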

By licensing my content under Creative Commons, I'm giving tools like ChatGPT permission to use my content, as long as they follow the license conditions. I don't believe ChatGPT uses that information today, but they could, and probably should, in the future.

If a website has high-quality content, and AI tools give credit to their sources, this can result in organic traffic back to the website.

All things considered, my base case is that AI bots will become an increasingly important channel for digital experience delivery, and that websites will be the main source of input for chatbots. I suspect that websites will only need to make small, incremental changes to optimize their content for AI tools.

Predicting the longer term impact of AI tools on websites

Longer term, AI tools will likely bring significant changes to digital marketing and content management.

I predict that over time, AI bots will not only provide factual information, but also communicate with emotions and personality, providing more human-like interactions than websites.

Compared to traditional websites, AI bots will be better at marketing, sales and customer success.

Unlike humans, AI bots will possess perfect product knowledge, speak many languages, and – this is the kicker – have a keen ability to identify what emotional levers to pull. They will be able to appeal to customers' motivations, whether it's greed, pride, frustration, fear, altruism, or envy.

The downside is that AI bots will also become more "skilled" at spreading misinformation, or might be able to cause emotional distress in a way that traditional websites don't. There is undeniably a dark side to AI bots.

My more speculative and long-term case is that AI chatbots will become the most effective channel for lead generation and conversion, surpassing websites in importance when it comes to digital marketing.

Without proper regulations and policies, that evolution will be tumultuous at best, and dangerous at worst. As I've been shouting from the rooftops since 2015 now: "When algorithms rule our lives, who should rule them?". I continue to believe that algorithms with significant effects on society require regulation and policies, just like the Food and Drug Administration (FDA) in the U.S. or the European Medicines Agency (EMA) in Europe oversee the food and drug industry.

The impact of AI on website development

Of course, the advantages of Generative AI extend beyond content creation and content delivery to software development: writing code (GitHub reports that Copilot now writes an average of 46% of the code of developers who use it), identifying security vulnerabilities (ChatGPT finds twice as many security vulnerabilities as a professional software security scanner), and more. The impact of AI on software development is a complex topic that warrants a separate blog post. In the meantime, here is a video demonstrating how to use ChatGPT to build a Drupal module.

The risks and challenges of Generative AI

Even though I'm optimistic about the potential of AI, I would be remiss if I failed to discuss some of the challenges associated with it.

Although Generative AI is really good at some tasks, like writing a sincere letter to my wife asking her to bake my favorite cookies, it still has serious issues. Some of these issues include, but are not limited to:

- Legal concerns – Copyrighted works have been included in training datasets without their creators' consent. As a result, many consider Generative AI a high-tech form of plagiarism. Microsoft, GitHub, and OpenAI are already facing a class action lawsuit for allegedly violating copyright law. The ownership and protection of content generated by AI is unclear, including whether AI tools can be considered "creators" of original content for copyright law purposes. Technologists, lawyers, and policymakers will need to work together to develop appropriate legal frameworks for the use of AI.

- Misinformation concerns – AI systems often "hallucinate", or make up facts, which could exacerbate the web's misinformation problem. One of the most interesting analogies I've seen comes from The New Yorker, which describes ChatGPT as a blurry JPEG of all of the text on the web. Just as a JPEG file loses some of the quality and integrity of the original, ChatGPT summarizes and approximates text on the web.

- Bias concerns – AI systems can have gender and racial biases. It is widely acknowledged that a significant proportion of the content available on the web is generated by white males residing in Western countries. Consequently, ChatGPT's training data and outputs are prone to reflecting this demographic bias. Biases are troubling and can even be dangerous, especially considering the potential societal impact of these technologies.

The above issues related to legal authorship, misinformation, and bias have also given rise to a host of ethical concerns.

My personal strategy

Disruptive changes can be polarizing: they come with some real downsides, while bringing new opportunities.

I believe there is no stopping AI. In my opinion, it's better to embrace change and focus on moving forward productively, rather than resisting it. Iterative improvements to both these algorithms and to our legal frameworks will hopefully address concerns over time.

In the past, the internet was fraught with risk, and to a large extent, it still is. However, productivity and efficiency improvements almost always outweigh risk.

While some individuals and organizations advocate against the use of AI altogether, my personal strategy is to proceed with caution. My strategy is two-fold: (1) focus on experimenting with AI rather than day-to-day usage, and (2) highlight the challenges with AI so that people can make their own choices. The previous section of this blog post tried to do that.

I also expect that organizations will use their own data to train their custom AI bots. This would eliminate many concerns, and let organizations take advantage of AI for applications like marketing and customer success. Simon Willison shows that in a couple of hours of work, he was able to build a question-answering bot grounded in his own website content. Time permitting, I'd like to experiment with that myself.
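A common way to build such a bot without training a model from scratch is retrieval: find the passages of your own content that are most relevant to a question, and hand them to a language model as context. The minimal Python sketch below illustrates only the retrieval step. It uses plain word counts and cosine similarity as a stand-in for the learned embeddings a production system would typically use, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_passages(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the question."""
    q = vectorize(question)
    ranked = sorted(passages, key=lambda p: cosine(q, vectorize(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Ground the bot's answer in the organization's own content."""
    context = "\n\n".join(top_passages(question, passages))
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")
```

The resulting prompt would then be sent to a chat model. Because the model only sees the organization's own passages as context, answers are easier to attribute and to keep on-brand; replacing `vectorize` with a real embedding model is the main change a production version would need.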

Conclusion

I'm intrigued, wary, and inspired as to where AI will take the web in the days, months, and years to come.

In the near term, Generative AI will alter how we create content. I expect integrations into CMSs will be simple and numerous, and that websites will only have to make small changes to optimize their content for AI tools.

Longer term, AI will change the way in which we interact with the web and how the web interacts with us. AI tools will steadily alter the relative importance of websites, and potentially even surpass websites in importance when it comes to digital marketing.

Exciting times, but let's move forward with caution!
